OpenOffice.org comes in several editions produced by different groups. Each edition has its own features, performance improvements, bug fixes, and new bugs. Go-oo in particular boasts performance as a feature with its slogan, "Better, Faster, Freer," but is there truth in advertising? Let's pit four OpenOffice.org editions against each other in a scientific speed smackdown.

The four editions are Sun Microsystems' OpenOffice.org (which I call "Vanilla"), Fedora's, Go-oo, and OxygenOffice Professional. If OpenOffice.org had a family tree, it would be a convoluted redneck family. The last three are derived directly from Sun Microsystems' code, and OxygenOffice is derived from (or part of) Go-oo. Some (but not all) patches in the derivatives go back upstream to Vanilla.

Benchmark test environment

The test system is a modest computer from several years ago.

- Operating system: Fedora 9 i386 with Linux 2.6.26

- CPU: AMD Athlon XP 3000+ (32-bit)

- RAM: 768 MB, DDR 333 (PC 2700)

- HDD: Maxtor 6Y080L0, IDE, 7200 RPM, 80 GB

- Video: Via VT8378 S3 Unichrome IGP at 1024x768

Benchmark test procedure

Like previous benchmarks, this OpenOffice.org benchmark uses automation to precisely measure the duration of a series of common operations: starting the application, opening a document, scrolling through from top to bottom, saving the document, and finally closing both the document and application. Automation is much more precise than a human with a stopwatch, and with the small durations in these tests, automation is necessary. Each of the five tests is repeated for 10 iterations. Before each set of 10 iterations, the system reboots. The purpose of rebooting is to measure the difference in cold start performance, where information is not yet cached in fast memory. A reboot marks a pass, and there are 15 passes. That means for each edition of OpenOffice.org, there are 150 iterations of each test. Multiplying by 5 tests and by 4 editions of OpenOffice.org yields 3000 total measurements collected for this article.
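To make the arithmetic concrete, here is a minimal sketch of how such a measurement plan could be enumerated and timed. It is not the automation script used for this article. The names `time_operation` and `measurement_plan` are hypothetical, and treating only the first iteration after each reboot as a cold start is an assumption.

```python
import time
from itertools import product

EDITIONS = ["Vanilla", "Fedora", "Go-oo", "OxygenOffice"]
TESTS = ["start", "open", "scroll", "save", "close"]
PASSES = 15      # one reboot per pass
ITERATIONS = 10  # iterations of every test within a pass


def time_operation(operation):
    """Return the wall-clock duration of one call to operation, in seconds."""
    begin = time.perf_counter()
    operation()
    return time.perf_counter() - begin


def measurement_plan():
    """Yield (edition, pass, iteration, test, is_cold) for every planned measurement.

    Assumption: only the first iteration after a reboot counts as a cold start.
    """
    for edition, pass_no, iteration in product(
        EDITIONS, range(1, PASSES + 1), range(1, ITERATIONS + 1)
    ):
        for test in TESTS:
            yield edition, pass_no, iteration, test, iteration == 1


plan = list(measurement_plan())
print(len(plan))  # 4 editions x 15 passes x 10 iterations x 5 tests
```

In a real harness, each planned entry would be passed to something like `time_operation` with a callable that drives the office suite. That part is omitted here because it depends on the automation interface.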

Only OxygenOffice enabled a quick starter by default, and as promised in the Microsoft Word benchmark, I disabled the OxygenOffice quick starter to give all editions a level playing field.

Benchmark results

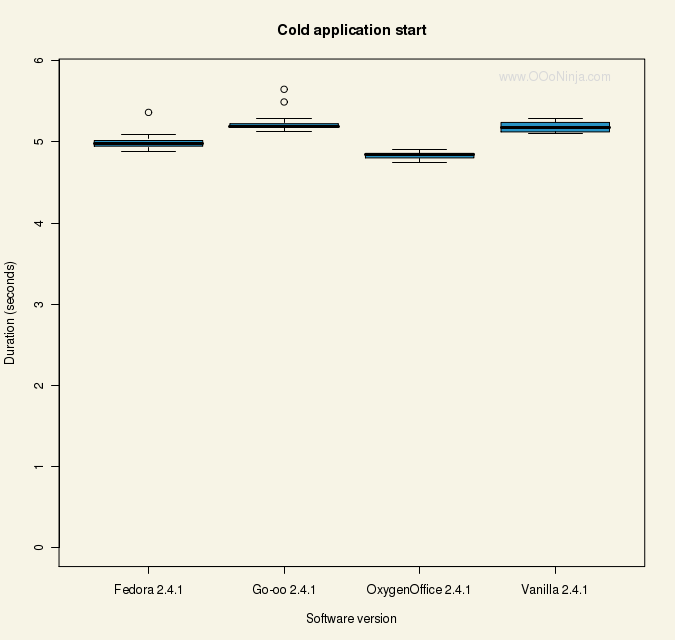

The single most important test is cold start performance: how fast the application starts the first time after the computer reboots. Go-oo's home page brags that its low I/O, fast linking, and minimized registry access contribute to better performance. Is it enough for Go-oo?

What a close race! The cold startup performance results range from 4.83 seconds to 5.24 seconds, but for all the smack talk, Go-oo came out fourth out of four. Why? There could be lots of reasons. First, Fedora can link to more system libraries, meaning it can share more code with other running programs, so Fedora doesn't need to include its own XML parser, pieces of Mozilla, etc. Second, Fedora is compiled using the latest GCC version 4.3, while OxygenOffice was built with GCC 4.1; Go-oo, 3.4.6; and Vanilla, apparently 3.4.1 and 2.9.5. Third, Go-oo has more features and is 49% bigger than Vanilla. On the other hand, OxygenOffice is also large. Finally, Go-oo and Vanilla do not use the Hashstyle (also called DT_GNU_HASH or .gnu.hash) technology, which Red Hat developed based on research by Go-oo hacker Michael Meeks (employed by Novell) to accelerate linking (which happens early in a program's startup): only Fedora and OxygenOffice use Hashstyle.

It may be hard to tell because the benchmark results are so consistent, but most charts are illustrated as box plots. Lower is always better.
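For readers unfamiliar with box plots, each box summarizes a set of timings by its minimum, lower quartile, median, upper quartile, and maximum. The sketch below computes those five values with Python's standard library (3.8 or later). The function name `box_plot_summary` and the sample durations are hypothetical and are not data from this benchmark.

```python
import statistics


def box_plot_summary(durations):
    """Return the five values a basic box plot draws for one set of timings."""
    q1, median, q3 = statistics.quantiles(durations, n=4, method="inclusive")
    return {
        "min": min(durations),
        "q1": q1,
        "median": median,
        "q3": q3,
        "max": max(durations),
    }


# Hypothetical durations in seconds, for illustration only (not measured data).
sample = [5.0, 5.1, 5.2, 5.3, 5.4]
print(box_plot_summary(sample))
```

When the results are very consistent, the quartiles sit close to the median and the box collapses into something that looks like a single line.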

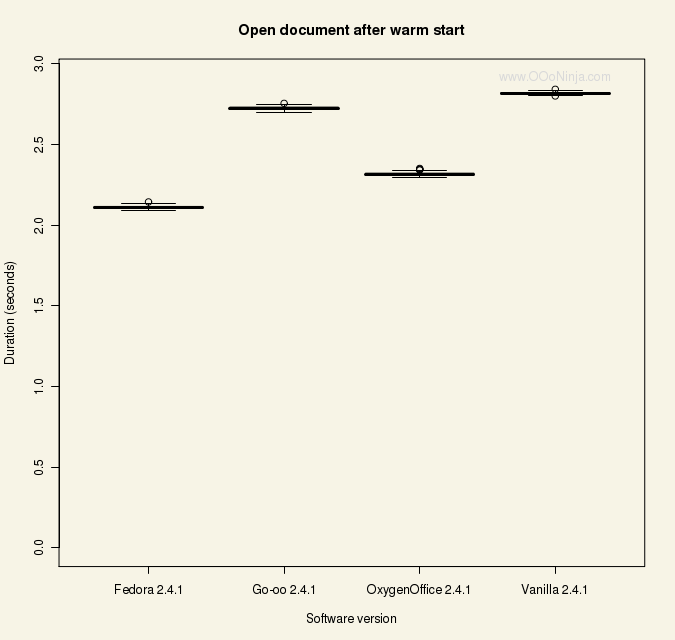

What about application startup after a warm start? (A warm start is starting the application a second time after a reboot, when the disk cache speeds up loading.) Here OxygenOffice comes in a close second after Fedora, while Go-oo and Vanilla practically tie for a more distant third place. Across all editions, the difference between cold and warm starts is about 4 seconds, which is spent waiting on the slow hard disk drive; newer solid-state storage devices would likely narrow that gap.

The second most important metric is opening a document, so I tested the reference document ODF_text_reference_v3.odt. In cold starts the variation was significant: last-place Go-oo finished 27% slower than first-place Fedora.
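A "percent slower" figure like this is the extra time divided by the faster time. As a small sketch, with a hypothetical function name and made-up durations chosen only to reproduce a 27% gap (the real durations are shown in the chart, not here):

```python
def percent_slower(slower_seconds, faster_seconds):
    """Return how much longer the slower run took, as a percentage of the faster run."""
    return (slower_seconds - faster_seconds) / faster_seconds * 100.0


# Hypothetical durations in seconds, for illustration only (not measured data).
example = percent_slower(12.7, 10.0)
print(f"{example:.0f}% slower")
```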

The results for warm starts are similar but 5-6 seconds faster.

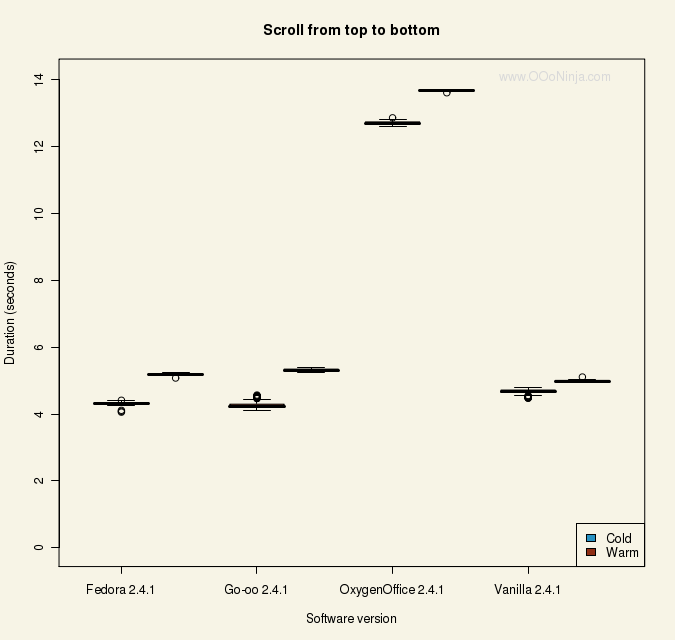

The scrolling test involves moving down line by line from top to bottom. Three editions scored similarly, but OxygenOffice did shockingly badly. The scrolling benchmark is the most artificial and least important.

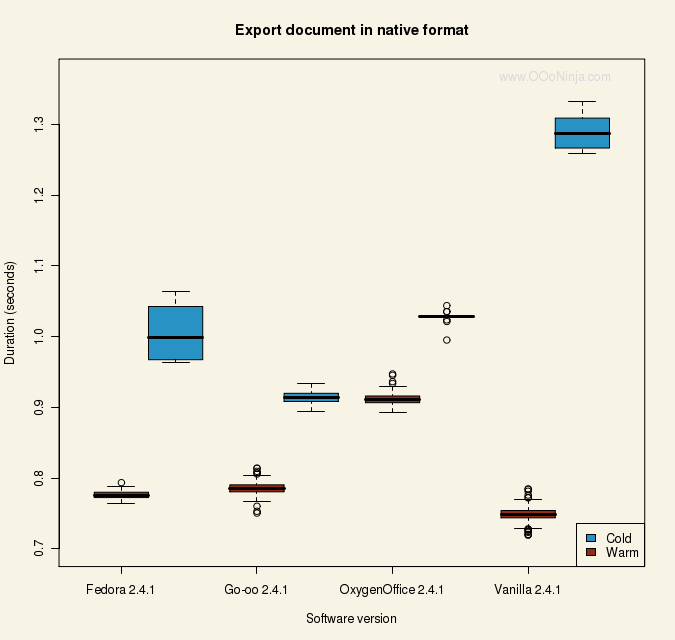

In exporting a document, Vanilla comes in both first (after a warm start) and last (after a cold start). Here Go-oo picks up its only meaningful win: it wins the race to export the document after a cold start. This chart does not start at zero because it would otherwise be too small to read.

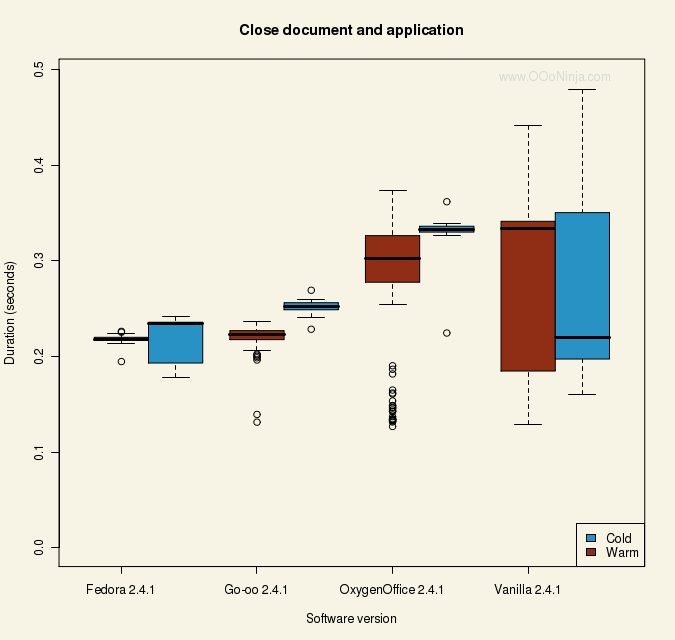

It does take a moment to close OpenOffice.org.

In the end, Fedora OpenOffice.org scored the fastest performance by a significant margin, and OxygenOffice took home a strong second place. Go-oo barely beat Vanilla for third.

Scrolling and closing were excluded from the totals because people generally don't wait for them like they do for starting the application, opening a document, and saving the document.

11 comments:

It should hopefully be little surprise that the edition of OOo that was specifically compiled for Fedora works better on Fedora than versions compiled generically. Of course that means little to anyone not using Fedora...

Certainly brings to light the value of free licensing when it comes to optimizing performance.

Alan: I was surprised. I have been following the development for a while, for example by reading the blog of Michael Meeks (a primary Go-oo developer) for a few years. Go-oo advertises performance and has more performance-specific patches. Fedora OOo 2.4.1 only has one performance-specific patch. Also as I mentioned, Go-oo does not use the Hashstyle technology developed by Go-oo hacker Michael Meeks!

It's not feasible to test on all distributions, but one day I would like to repeat the test on Ubuntu.

As you point out, you can't recompile Microsoft Word for Windows, but Windows is more consistent (available .DLLs and their versions, for example), so there is also less need.

I wonder how long it takes until Michael Meeks responds to this test, whether it be here or on his own blog. I, too, have been a long time reader of Michael Meeks' blog as well as yours.

Anyways, I would be curious to see this comparison of performance testing done on Windows for the different OOo versions. I would love to see this if you could pull off this testing on Windows.

Keep up the great work with your OOo articles.

Thanks,

Dave

go-oo has a nice quickstarter applet.

It does not take much memory as well.

Have you tried enabling that? If not, do it. After this, my OO is rocket fast.

Would be interested to see how OOo 3.0 compares to 2.4 on these tests.

Hi Andrew,

Interesting write-up! The fact that the go-oo generic builds do not use the --hash-style=both work is basically a simple screwup by the packager, which we will fix [ I guess OxygenOffice simply fails to get that wrong, while using the same code ]. If you compared the native SUSE package to the native Fedora package on its own distro I suspect you would find it a far closer match (and perhaps a fairer comparison :-)

As for the provenance of --hash-style=gnu : yes the final implementation was done by Jakub from RedHat [who have a hold on glibc development], but the research, measurement, prototype, and design input were provided by me.

Also - I suspect you fail to notice how much of our work has already gone up-stream; Sun have integrated at least a large proportion of our work. I will have to re-do my performance measurements for the go-oo website when 3.0 comes out to see how we do there.

Anyhow, was not aware of your work - I love the performance comparison over time paper - that is most interesting: it seems I'm fighting a losing battle ...

HTH,

Michael.

It would also have been nice to see StarOffice and Lotus Symphony tested.

I have followed your writing for a long time. Really, you have given very useful information.

In spite of my trouble with English, I am trying to read and understand your writing.

And I am following frequently. I hope that you will be with us with much more sharing.

I hope that your success will go on.

komik oyun: Michael responded right away. There is a link at the bottom of the page to his blog. Also, I think there was a discussion on the go-oo.org mailing list, but it's been a long time.

Can you provide the automation script that you've used to measure the performance?
